AI-powered error solutions for your Laravel apps

While working on What The Diff - an AI-powered code review assistant (written in PHP with Laravel) - I learned a lot about OpenAI and how to come up with good prompts.

I recently had the pleasure of giving a talk about this topic at Laracon EU in Lisbon.

Among other things, I demoed how you can make use of the OpenAI API and use it to try and provide solutions for your Laravel and PHP exceptions.

Let's take a look at the implementation and how you can make use of this in your own applications.

Prerequisites

In order to make API calls to OpenAI, you will need to sign up for an OpenAI account and create an API key. We will use this API key later on to connect our Laravel application to the API itself.

Be aware that while creating an account at OpenAI is free, invoking the AI models is not. You pay "per use". So what does that mean?

OpenAI provides several different AI models, such as "davinci", "curie", "babbage", ... These models come at different prices. Davinci is by far the most capable model, and its pricing looks like this:

$0.02 / 1000 tokens

Now what's a token?

The text that you send to OpenAI - and the text that it generates - will be tokenized, and you are charged based on these tokens. As a rule of thumb, roughly 3-4 characters of English text add up to 1 token.

At the end of this blog post, we'll make API calls that produce on average ~400-500 tokens per request. This means that invoking this model (and showing the AI generated solution) will cost you roughly $0.01 per exception.

You do get $18 in free credit though, so there's plenty of room to explore this.
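To get a feeling for these numbers, here is a minimal sketch of the arithmetic in plain PHP. The function names are made up for this post, and the 4-characters-per-token ratio is only the rule of thumb from above, not what OpenAI's tokenizer actually does.

```php
<?php

// Rough estimate only: assumes ~4 characters of English text per token.
function estimateTokens(string $text, float $charsPerToken = 4.0): int
{
    return (int) ceil(strlen($text) / $charsPerToken);
}

// Assumes the davinci price quoted above: $0.02 per 1,000 tokens.
function estimateCostInDollars(int $tokens, float $pricePerThousand = 0.02): float
{
    return $tokens / 1000 * $pricePerThousand;
}

// A request of about 2,000 characters is estimated at 500 tokens.
$tokens = estimateTokens(str_repeat('a', 2000));

// 500 tokens at $0.02 per 1,000 tokens is roughly one cent.
$cost = estimateCostInDollars($tokens);
```

Treat the result as a ballpark figure. The usage reported by the API is what you are actually billed for.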

Adding the OpenAI client to your Laravel application

In order to communicate with OpenAI, we will need to make use of their API. Thankfully, there's already an existing open-source package that we can use in our application that will make working with the API a lot easier.

To install the package, run the following command:

composer require openai-php/laravel

Next, publish the configuration file:

php artisan vendor:publish --provider="OpenAI\Laravel\ServiceProvider"

Now all you need to do is add your OpenAI API key to your .env file:

OPENAI_API_KEY=YOUR-KEY-HERE

Adding solutions to Ignition

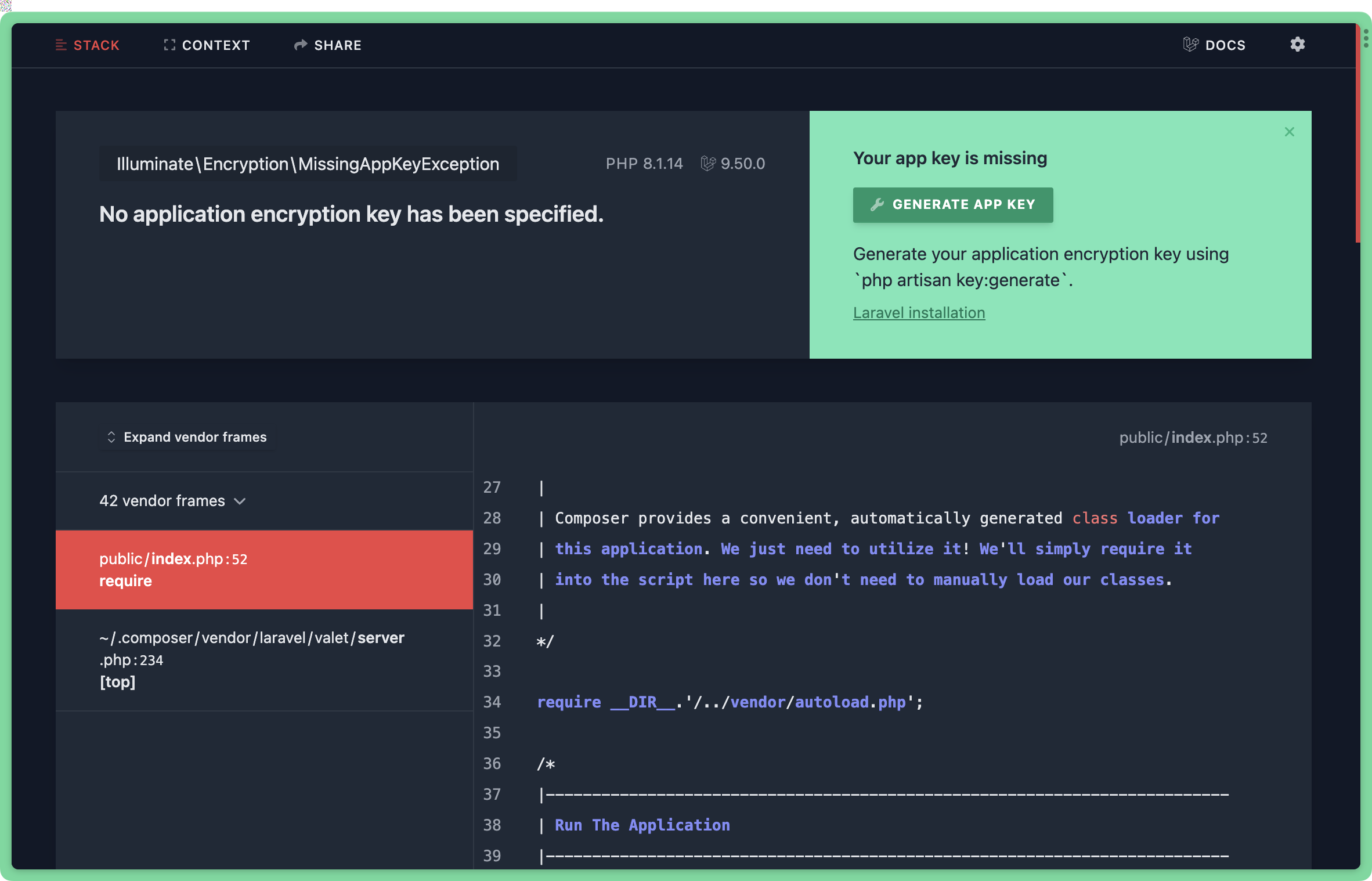

"Ignition" is the name of Laravel's error page. Besides showing you a nice stacktrace as well as some contextual information about your exceptions, it also has a really cool ability so register custom "solutions" for any error.

These solutions will be shown as a green card underneath the error itself. You might have seen this already, for example when trying to open a Laravel application that does not yet have an application key configured.

We can also provide our own custom solution. This works in two different ways.

- Let an exception provide its own solution

This is for example useful if you are a package developer and want to enrich your package exceptions with custom solutions.

Unfortunately, this approach is not going to help us much, as we want to add our AI solutions to all sorts of exceptions - regardless of whether we have control over them or not.

The other way to add solutions to Ignition is via a so-called SolutionProvider.

Let's start by creating a new solution provider in our own codebase. Inside app/Solutions, we'll create a new file called OpenAiSolutionProvider.php. This class should implement the Spatie\Ignition\Contracts\HasSolutionsForThrowable interface which makes us implement two methods:

namespace App\Solutions;

use Spatie\Ignition\Contracts\HasSolutionsForThrowable;
use Throwable;

class OpenAiSolutionProvider implements HasSolutionsForThrowable
{
    public function canSolve(Throwable $throwable): bool
    {
        return true;
    }

    public function getSolutions(Throwable $throwable): array
    {
        return [new OpenAiSolution($throwable)];
    }
}

The solution provider is responsible for two things:

We need to determine if we can provide a solution for a given throwable. As we strongly believe in the power of AI, we'll just always return true here.

For all throwables, the getSolutions method will be called. It expects us to return an array of 1...n solutions (that's because one provider could give multiple possible solutions for a single error).

In there we're going to return a new instance of an OpenAiSolution class - which we need to create next.

Inside app/Solutions we'll create a new file called OpenAiSolution.php. Similar to the solution provider, this one needs to implement the Solution interface, which gives the class all the required methods that will be needed to output the solution information:

namespace App\Solutions;

use Spatie\Ignition\Contracts\Solution;
use Throwable;

class OpenAiSolution implements Solution
{
    public function __construct(protected Throwable $throwable)
    {
    }

    public function getSolutionTitle(): string
    {
        // TODO: Implement getSolutionTitle() method.
    }

    public function getSolutionDescription(): string
    {
        // TODO: Implement getSolutionDescription() method.
    }

    public function getDocumentationLinks(): array
    {
        // TODO: Implement getDocumentationLinks() method.
    }
}

How can we use the error information to query OpenAI?

Okay, so we now have a class that we can use to make our API request to OpenAI and then hopefully come up with a possible solution to our error.

Before taking a look at the actual implementation, let's think about how we can create a prompt that we will send to OpenAI in order to fix an error.

Let's imagine what a Blade view would look like that acts as the simplest possible prompt (a so-called zero-shot prompt):

Fix the following error:

Exception Message: {!! $throwable->getMessage() !!}

Solution:

We would then pass the throwable to the template, send this to OpenAI and hope for the best.

While this might work for some specific errors, we are missing out on a lot of contextual information.

Things like:

Which file caused the error?

Which line number?

Which code snippet?
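Outside of Blade, the zero-shot prompt above boils down to simple string building. The following is only a sketch in plain PHP, and buildZeroShotPrompt is a hypothetical helper name, not something that ships with Laravel or Ignition.

```php
<?php

// Hypothetical helper: builds the zero-shot prompt from nothing but the message.
function buildZeroShotPrompt(Throwable $throwable): string
{
    return "Fix the following error:\n"
        . "Exception Message: {$throwable->getMessage()}\n"
        . "Solution:";
}

$prompt = buildZeroShotPrompt(new RuntimeException('Class "Foo" not found'));
```

The only thing the model gets to see here is the message itself. File, line number and code snippet are nowhere in the prompt.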

Improving the prompt

As mentioned above, this is a so-called "zero-shot prompt". This means that we do not provide any examples to help the language model understand what kind of result we want it to generate. To fix this, let's create a "few-shot prompt" instead.

Again - we do not need to worry about any implementation specific details - all we want is to create a prompt with as much information as needed to be helpful for the language model.

Language models are trained on text and code found on the internet and in books. This means that their training data also includes the usual mistakes and errors that we as humans make and write on the internet. So the results that OpenAI generates may include these errors as well.

We can improve this behavior by telling the AI that it is very good at its job. So let's start our prompt with something like this:

You are a very good PHP developer. Use the following context to find a possible fix for the exception message at the end.

…

Next up, we'll add one example error and an example solution:

File: /Users/marcelpociot/Code/ai-errors/app/Documentation.php

Exception: syntax error, unexpected token "{", expecting variable

Line: 193

Snippet including line numbers:

192 public static function getDocVersions(
193 {
194 return [
195 'master' => 'Master',
196 '9.x' => '9.x',

Possible Fix:

Line 192 has a syntax error (missing a closing parenthesis). The code should look like this:

```

public static function getDocVersions()

```

As you can see, we provide an example with all the information that we later want to send with our actual error. In this case, I'm sending the filename, the exception message, the line number and a snippet including the line numbers.

The example solution then mentions the line number that contains the error, as well as a code block with a possible solution.

To get even better results, you can add more examples to your prompt. Just be aware that this is going to increase the number of tokens that your prompt will consume.

After setting up your examples, we can now provide the variables for our actual error:

File: {!! $file !!}

Exception: {!! $exception !!}

Line: {!! $line !!}

Snippet including line numbers:

{!! $snippet !!}

Possible Fix:

The idea is that we keep the same format as in our example(s) above, and then stop after "Possible Fix:" in order to tell the AI model where to start generating the solution.

When we put all the individual pieces together, we end up with a prompt that looks like this:

You are a very good PHP developer. Use the following context to find a possible fix for the exception message at the end.

File: /Users/marcelpociot/Code/ai-errors/app/Documentation.php

Exception: syntax error, unexpected token "{", expecting variable

Line: 193

Snippet including line numbers:

192 public static function getDocVersions(
193 {
194 return [
195 'master' => 'Master',
196 '9.x' => '9.x',

Possible Fix:

Line 192 has a syntax error (missing a closing parenthesis). The code should look like this:

```

public static function getDocVersions()

```

File: {!! $file !!}

Exception: {!! $exception !!}

Line: {!! $line !!}

Snippet including line numbers:

{!! $snippet !!}

Possible Fix:

Of course we could also add even more data and pass the entire stacktrace (or the first X frames of it) to the prompt. But I wanted to keep the prompt shorter and therefore only want to look at the first frame of the stacktrace.
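If you want to play with the few-shot structure without touching Blade, here is a minimal sketch in plain PHP. buildFewShotPrompt and the array keys are assumptions made for this illustration, and the spacing between the blocks is my own choice, not a requirement of the OpenAI API.

```php
<?php

/**
 * Hypothetical helper: joins the instruction, any number of solved examples
 * and the real error into one prompt.
 *
 * @param array<int, array{file: string, exception: string, line: int, snippet: string, fix: string}> $examples
 * @param array{file: string, exception: string, line: int, snippet: string} $error
 */
function buildFewShotPrompt(array $examples, array $error): string
{
    // Every block uses the same layout, so the model can pick up the pattern.
    $formatContext = fn (array $item): string => implode("\n", [
        "File: {$item['file']}",
        "Exception: {$item['exception']}",
        "Line: {$item['line']}",
        'Snippet including line numbers:',
        $item['snippet'],
        'Possible Fix:',
    ]);

    $parts = [
        'You are a very good PHP developer. Use the following context to find a possible fix for the exception message at the end.',
    ];

    foreach ($examples as $example) {
        // Solved examples include the fix right after "Possible Fix:".
        $parts[] = $formatContext($example) . "\n" . $example['fix'];
    }

    // The real error stops at "Possible Fix:" so the model continues from there.
    $parts[] = $formatContext($error);

    return implode("\n\n", $parts);
}

$prompt = buildFewShotPrompt(
    [[
        'file' => '/app/Documentation.php',
        'exception' => 'syntax error, unexpected token "{", expecting variable',
        'line' => 193,
        'snippet' => "192 public static function getDocVersions(\n193 {",
        'fix' => 'Line 192 has a syntax error (missing a closing parenthesis).',
    ]],
    [
        'file' => '/app/Http/Kernel.php',
        'exception' => 'Undefined variable $foo',
        'line' => 12,
        'snippet' => '12 echo $foo;',
    ]
);
```

Every additional example in the first argument makes the prompt longer, so it also makes each request more expensive.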

Gathering the required data

Now that we know what kind of data we want to send to OpenAI we can start implementing this and figure out which data we need to obtain from the throwable.

Let's start by creating a new method called generatePrompt:

protected function generatePrompt(Throwable $throwable): string
{
    //
}

As mentioned above, we need to pass the code snippet of the first frame from our stacktrace. Depending on the error, the first frame will often point into the Laravel framework/vendor directory instead of your own codebase. While this is more of a design decision, I am going to focus on errors that happen within my own codebase and therefore only take the first frame of the stacktrace that's actually coming from my application - not the vendor folder.

To retrieve the frame from my own application, we can make use of the Backtrace class that ships with Ignition. Let's add a new method called getApplicationFrame:

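// At the top of OpenAiSolution.php (both classes come from the spatie/backtrace package):
use Spatie\Backtrace\Backtrace;
use Spatie\Backtrace\Frame;

protected function getApplicationFrame(Throwable $throwable): ?Frame
{
    $backtrace = Backtrace::createForThrowable($throwable)->applicationPath(base_path());

    $frames = $backtrace->frames();

    // No application frame means no index, so we end up returning null.
    return $frames[$backtrace->firstApplicationFrameIndex()] ?? null;
}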

In this method we are creating a backtrace for the given throwable. By passing our application path, we can then retrieve the first application frame out of all available frames. If the error only occurs within the vendor folder, we are going to return null instead.
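To make the idea of a "first application frame" more tangible, here is a rough sketch of the same lookup in plain PHP. It is not how the Backtrace class works internally. firstApplicationFrame is a hypothetical helper, the frames are simple arrays with file and line keys, and it assumes forward slashes in all paths.

```php
<?php

/**
 * Hypothetical helper: returns the first frame that lives inside the
 * application directory but outside of its vendor folder.
 *
 * @param array<int, array{file?: string, line?: int}> $frames
 */
function firstApplicationFrame(array $frames, string $basePath): ?array
{
    $basePath = rtrim($basePath, '/') . '/';
    $vendorPath = $basePath . 'vendor/';

    foreach ($frames as $frame) {
        $file = $frame['file'] ?? null;

        // Internal PHP functions have no file, skip those frames.
        if ($file === null) {
            continue;
        }

        if (str_starts_with($file, $basePath) && ! str_starts_with($file, $vendorPath)) {
            return $frame;
        }
    }

    // Every frame was vendor code (or outside of the application).
    return null;
}

$frames = [
    ['file' => '/app/vendor/laravel/framework/src/Illuminate/Cache/CacheManager.php', 'line' => 10],
    ['file' => '/app/app/Http/Controllers/HomeController.php', 'line' => 42],
];

$frame = firstApplicationFrame($frames, '/app');
```

In this example the vendor frame is skipped and the controller frame on line 42 is returned.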

We can now make use of this method inside of generatePrompt.

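protected function generatePrompt(Throwable $throwable): string
{
    // May be null when every frame lives in the vendor directory.
    $applicationFrame = $this->getApplicationFrame($throwable);

    // Up to 15 lines of code around the failing line, keyed by line number.
    $snippet = $applicationFrame?->getSnippet(15) ?? [];

    return (string) view('prompts.solution', [
        'snippet' => collect($snippet)->map(fn ($line, $number) => $number.' '.$line)->join(PHP_EOL),
        // Fall back to the throwable's own location if there is no application frame.
        'file' => $applicationFrame?->file ?? $throwable->getFile(),
        'line' => $applicationFrame?->lineNumber ?? $throwable->getLine(),
        'exception' => $throwable->getMessage(),
    ]);
}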

In order to generate the prompt, we now retrieve the first application frame from our throwable and then get 15 lines of the code snippet surrounding the line where the exception occurred. We then pass all the information we need to a new view called prompts.solution.

This is the prompt above, just wrapped inside a Blade view at resources/views/prompts/solution.blade.php.
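The collection call that builds the snippet string is easy to miss, so here is what it does, written as a sketch in plain PHP. formatSnippet is a hypothetical name. Like the code above, it assumes that the snippet is an array of code lines keyed by their line numbers.

```php
<?php

/**
 * Hypothetical helper: prefixes every code line with its line number.
 *
 * @param array<int, string> $snippet Code lines keyed by line number.
 */
function formatSnippet(array $snippet): string
{
    $numbered = [];

    foreach ($snippet as $number => $line) {
        $numbered[] = $number . ' ' . $line;
    }

    return implode(PHP_EOL, $numbered);
}

$formatted = formatSnippet([
    192 => 'public static function getDocVersions(',
    193 => '{',
]);
```

The result has the same shape as the snippet in our example prompt, which makes it easier for the model to refer to a specific line in its answer.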

Making the API call

With our prompt generation logic in place, we now need to make the actual API call to OpenAI.

We can add the API call and prompt generation to our solution constructor like this:

use Illuminate\Support\Facades\Cache;
use OpenAI\Laravel\Facades\OpenAI;

class OpenAiSolution implements Solution
{
    protected string $solution;

    public function __construct(protected Throwable $throwable)
    {
        // Cache the completion for an hour, keyed by a hash of the stacktrace.
        $this->solution = Cache::remember('solution-'.sha1($this->throwable->getTraceAsString()), now()->addHour(), fn () =>
            OpenAI::completions()->create([
                'model' => 'text-davinci-003',
                'prompt' => $this->generatePrompt($this->throwable),
                'max_tokens' => 100,
                'temperature' => 0,
            ])->choices[0]->text
        );
    }

    // ...
}

We store the generated text from OpenAI in a property. I also added a caching mechanism to avoid sending unnecessary OpenAI API requests. This way, we will not send the exact same error twice within an hour.
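One thing to keep in mind about the cache key: getTraceAsString() describes the call stack, but it does not contain the exception message. If that turns out to be too coarse for your application, one possible variation is to hash a few more properties of the throwable. This is only a sketch, and solutionCacheKey is a hypothetical helper, not part of the code above.

```php
<?php

// Hypothetical helper: builds a cache key from several properties of the
// throwable instead of only its stacktrace.
function solutionCacheKey(Throwable $throwable): string
{
    return 'solution-' . sha1(implode('|', [
        get_class($throwable),
        $throwable->getMessage(),
        $throwable->getFile(),
        $throwable->getLine(),
        $throwable->getTraceAsString(),
    ]));
}

$first = new RuntimeException('Memcached extension is missing');
$second = new RuntimeException('Undefined variable $foo');

$firstKey = solutionCacheKey($first);
$secondKey = solutionCacheKey($second);
```

The same throwable always produces the same key, while two errors with different messages no longer share one cached solution.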

With the solution stored within the object, we can now return it in the methods that we initially stubbed out from the Solution interface.

public function getSolutionTitle(): string
{
    return 'AI Generated Solution';
}

public function getSolutionDescription(): string
{
    return $this->solution;
}

public function getDocumentationLinks(): array
{
    return [];
}

And that's it! Now our solution class is ready to go, so let's register our solution provider.

Registering the solution provider

Inside of our AppServiceProvider (or any service provider) we can now register this new solution provider class like this:

use App\Solutions\OpenAiSolutionProvider;
use Illuminate\Support\ServiceProvider;
use Spatie\Ignition\Contracts\SolutionProviderRepository;

class AppServiceProvider extends ServiceProvider
{
    public function register()
    {
        app(SolutionProviderRepository::class)
            ->registerSolutionProvider(OpenAiSolutionProvider::class);
    }
}

This will tell Ignition that it should also ask our new solution provider for possible solutions.

Testing it

Now for the fun part! Let's create some errors!

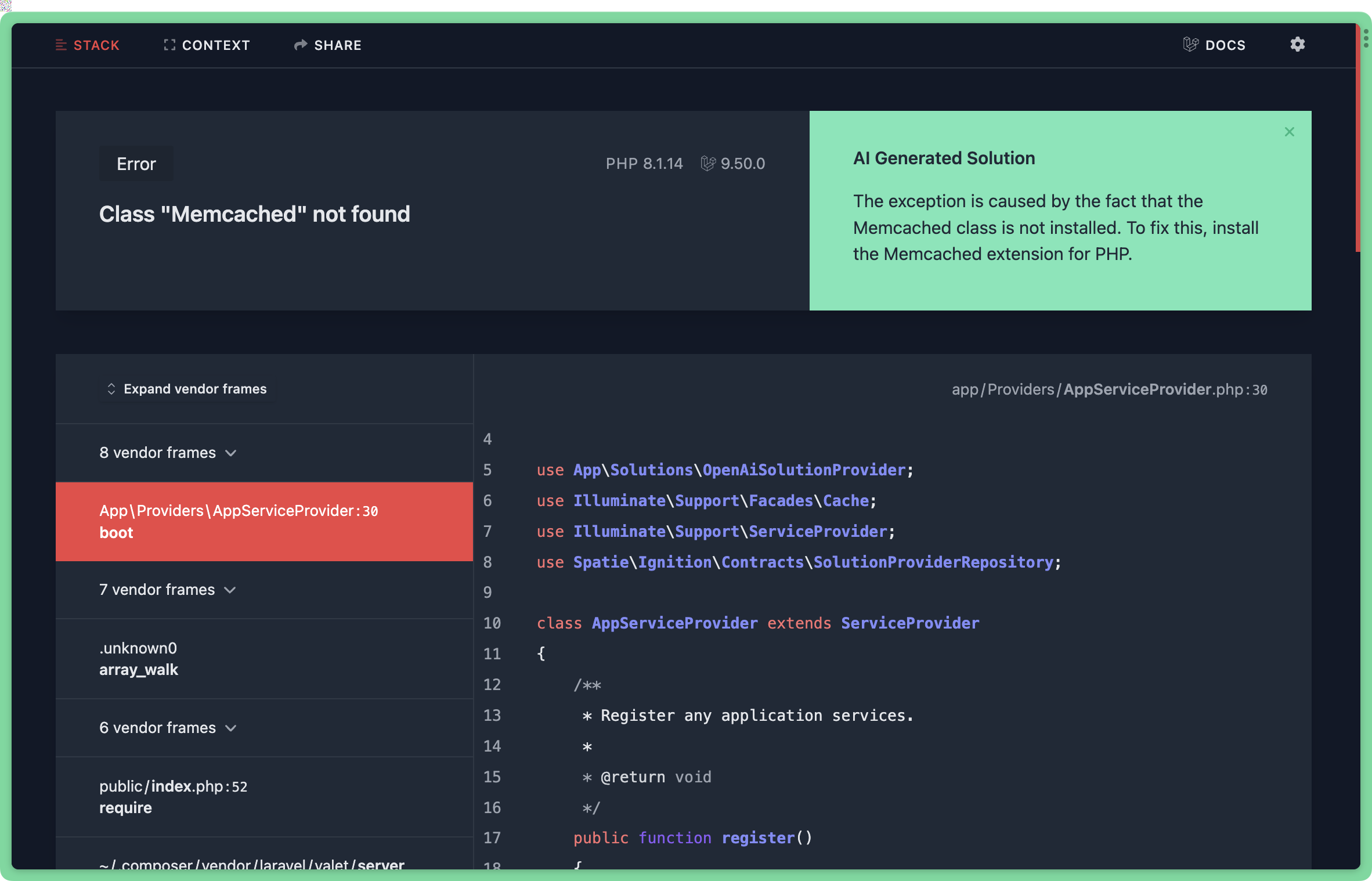

For example, you could try and retrieve something from a non-existent cache implementation:

Cache::store('memcached')->get('something');

I don't have the Memcached PHP extension installed locally. If I now refresh my browser, this is what I get:

Pretty cool, huh? The solution tells me that I need to install the Memcached extension, which is totally right!

Go ahead and try it out with your own exceptions and errors.

Where to go from here?

Of course, this is a simplified approach for coming up with solutions to exceptions. It could be improved further by providing more context, like bigger chunks or entire methods of the affected code. But I think it's a great starting point - especially if you haven't looked at prompt engineering for GPT-3 yet!

We are currently working on a super exciting PHP-based AI package that will make working with a lot of common AI use cases a lot easier, so make sure to subscribe to our newsletter to stay up-to-date.
