Overview

The goal of this hands-on lab is for you to turn code that you have developed into a replicated application running on Kubernetes, which in turn runs on Kubernetes Engine. For this lab the code will be a simple Hello World Node.js app.

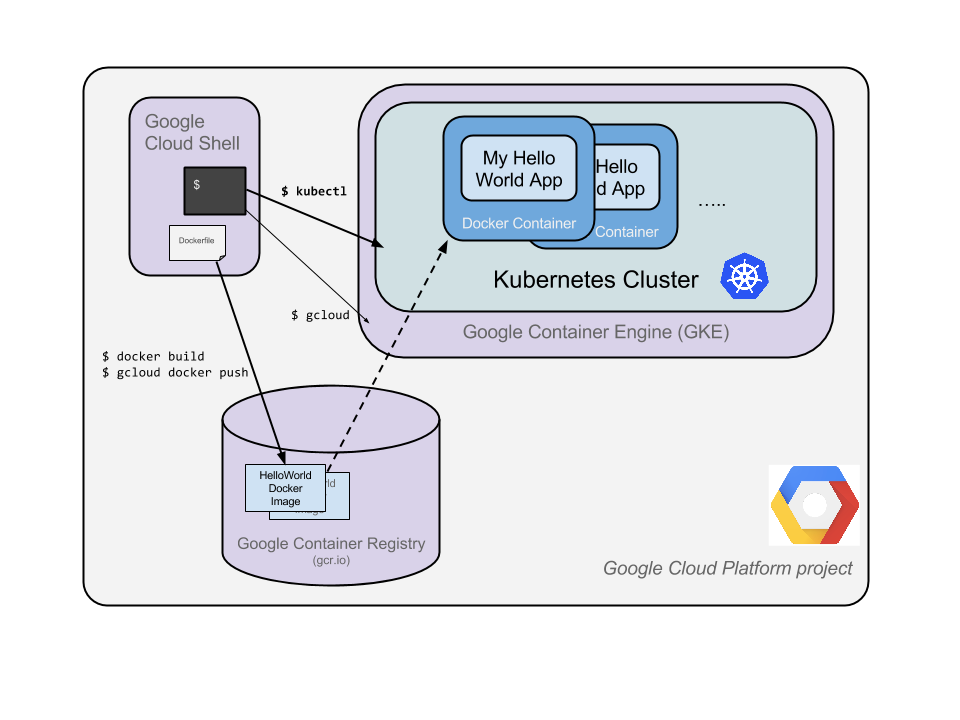

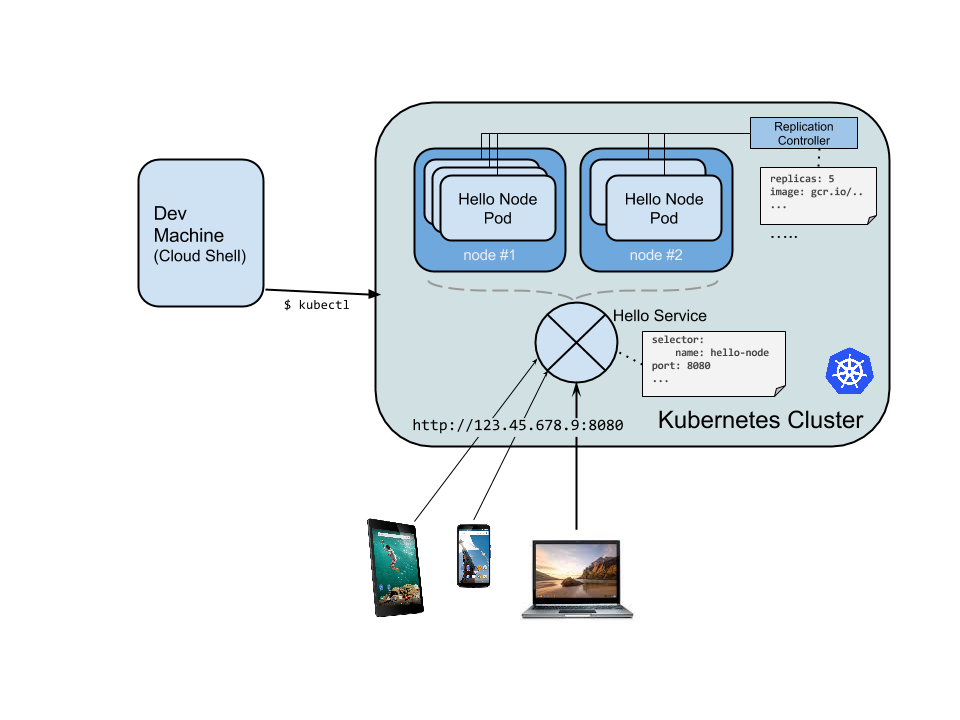

Here's a diagram of the various parts in play in this lab, to help you understand how the pieces fit together with one another. Use this as a reference as you progress through the lab; it should all make sense by the time you get to the end (but feel free to ignore this for now).

Kubernetes is an open source project (available on kubernetes.io) that can run in many different environments, from laptops to high-availability multi-node clusters; from public clouds to on-premises deployments; from virtual machines to bare metal.

For the purpose of this lab, using a managed environment such as Kubernetes Engine (a Google-hosted version of Kubernetes running on Compute Engine) will allow you to focus more on experiencing Kubernetes rather than setting up the underlying infrastructure.

What you'll learn

- Create a Node.js server.

- Create a Docker container image.

- Create a container cluster.

- Create a Kubernetes pod.

- Scale up your services.

Prerequisites

- Familiarity with standard Linux text editors such as vim, emacs, or nano will be helpful.

You are encouraged to type the commands yourself, which helps reinforce the core concepts. Many labs also include a code block that contains the required commands, so you can copy and paste them into the appropriate places during the lab.

Setup and requirements

Before you click the Start Lab button

Read these instructions. Labs are timed and you cannot pause them. The timer, which starts when you click Start Lab, shows how long Google Cloud resources are made available to you.

This hands-on lab lets you do the lab activities in a real cloud environment, not in a simulation or demo environment. It does so by giving you new, temporary credentials you use to sign in and access Google Cloud for the duration of the lab.

To complete this lab, you need:

- Access to a standard internet browser (Chrome browser recommended).

Note: Use an Incognito (recommended) or private browser window to run this lab. This prevents conflicts between your personal account and the student account, which could otherwise cause extra charges on your personal account.

- Time to complete the lab—remember, once you start, you cannot pause a lab.

Note: Use only the student account for this lab. If you use a different Google Cloud account, you may incur charges to that account.

How to start your lab and sign in to the Google Cloud console

Click the Start Lab button. If you need to pay for the lab, a dialog opens for you to select your payment method. On the left is the Lab Details pane with the following:

- The Open Google Cloud console button

- Time remaining

- The temporary credentials that you must use for this lab

- Other information, if needed, to step through this lab

Click Open Google Cloud console (or right-click and select Open Link in Incognito Window if you are running the Chrome browser).

The lab spins up resources, and then opens another tab that shows the Sign in page.

Tip: Arrange the tabs in separate windows, side-by-side.

Note: If you see the Choose an account dialog, click Use Another Account.

If necessary, copy the Username below and paste it into the Sign in dialog.

student-03-d9dead68b660@qwiklabs.net

You can also find the Username in the Lab Details pane.

Click Next.

Copy the Password below and paste it into the Welcome dialog.

vyJpF8vxnTXf

You can also find the Password in the Lab Details pane.

Click Next.

Important: You must use the credentials the lab provides you. Do not use your Google Cloud account credentials.

Note: Using your own Google Cloud account for this lab may incur extra charges.

Click through the subsequent pages:

- Accept the terms and conditions.

- Do not add recovery options or two-factor authentication (because this is a temporary account).

- Do not sign up for free trials.

After a few moments, the Google Cloud console opens in this tab.

Note: To access Google Cloud products and services, click the Navigation menu or type the service or product name in the Search field.

Activate Cloud Shell

Cloud Shell is a virtual machine that is loaded with development tools. It offers a persistent 5 GB home directory and runs on Google Cloud. Cloud Shell provides command-line access to your Google Cloud resources.

Click Activate Cloud Shell at the top of the Google Cloud console.

Click through the following windows:

- Continue through the Cloud Shell information window.

- Authorize Cloud Shell to use your credentials to make Google Cloud API calls.

When you are connected, you are already authenticated, and the project is set to your Project_ID, qwiklabs-gcp-04-70fa585adb86. The output contains a line that declares the Project_ID for this session:

Your Cloud Platform project in this session is set to qwiklabs-gcp-04-70fa585adb86

gcloud is the command-line tool for Google Cloud. It comes pre-installed on Cloud Shell and supports tab-completion.

- (Optional) You can list the active account name with this command:

gcloud auth list

- Click Authorize.

Output:

ACTIVE: *

ACCOUNT: student-03-d9dead68b660@qwiklabs.net

To set the active account, run:

$ gcloud config set account `ACCOUNT`

- (Optional) You can list the project ID with this command:

gcloud config list project

Output:

[core]

project = qwiklabs-gcp-04-70fa585adb86

Note: For full documentation of gcloud, refer to the gcloud CLI overview guide in the Google Cloud documentation.

Task 1. Create your Node.js application

- Using Cloud Shell, write a simple Node.js server that you'll deploy to Kubernetes Engine:

vi server.js

- Enter insert mode by pressing:

i

- Add this content to the file:

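var http = require('http');

// Answer every request with status 200 and a plain-text greeting.
var handleRequest = function(request, response) {
  response.writeHead(200);
  response.end("Hello World!");
};

// Create the server and listen on port 8080, the port used throughout this lab.
var www = http.createServer(handleRequest);
www.listen(8080);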

Note: vi is used here, but nano and emacs are also available in Cloud Shell. You can also use the web editor feature of Cloud Shell as described in the How Cloud Shell works guide.

- Save the server.js file by pressing Esc, then typing:

:wq

- Since Cloud Shell has the node executable installed, run this command to start the node server (the command produces no output):

node server.js

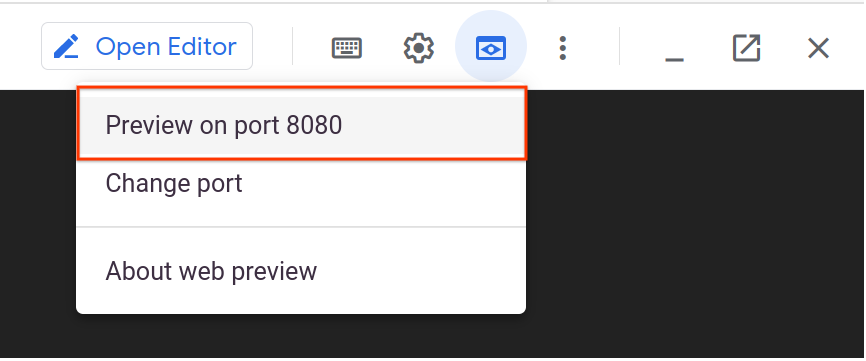

- Use the built-in Web preview feature of Cloud Shell to open a new browser tab and proxy a request to the instance you just started on port 8080.

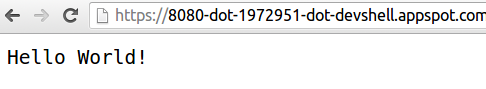

A new browser tab opens to display your results.

- Before continuing, return to Cloud Shell and press Ctrl+C to stop the running node server.

Next you will package this application in a Docker container.

Task 2. Create a Docker container image

- Next, create a Dockerfile that describes the image you want to build. Docker container images can extend from other existing images, so for this image, you'll extend from an existing Node image:

vi Dockerfile

- Enter insert mode by pressing:

i

- Add this content:

FROM node:6.9.2

EXPOSE 8080

COPY server.js .

CMD ["node", "server.js"]

This "recipe" for the Docker image will:

- Start from the node image found on Docker Hub.

- Expose port 8080.

- Copy your server.js file to the image.

- Start the node server as you previously did manually.

- Save this Dockerfile by pressing Esc, then typing:

:wq

- Build the image with the following:

docker build -t hello-node:v1 .

It'll take some time to download and extract everything, but you can see the progress bars as the image builds.

Once complete, test the image locally by running a Docker container as a daemon on port 8080 from your newly-created container image.

- Run the Docker container with this command:

docker run -d -p 8080:8080 hello-node:v1

Your output should look something like this:

325301e6b2bffd1d0049c621866831316d653c0b25a496d04ce0ec6854cb7998

- To see your results, use the web preview feature of Cloud Shell. Alternatively, use curl from your Cloud Shell prompt:

curl http://localhost:8080

This is the output you should see:

Hello World!

Note: Full documentation for the docker run command can be found in the Docker run reference.

Next, stop the running container.

- Find your Docker container ID by running:

docker ps

Your output should look like this:

CONTAINER ID IMAGE COMMAND

2c66d0efcbd4 hello-node:v1 "/bin/sh -c 'node

- Stop the container by running the following, replacing [CONTAINER ID] with the value provided from the previous step:

docker stop [CONTAINER ID]

Your console output should resemble the following (your container ID):

2c66d0efcbd4

Now that the image is working as intended, push it to the Google Artifact Registry, a private repository for your Docker images, accessible from your Google Cloud projects.

- First you need to create a repository in Artifact Registry. Let's call it my-docker-repo. Run the following command:

gcloud artifacts repositories create my-docker-repo \
    --repository-format=docker \
    --location=us-west1 \
    --project=qwiklabs-gcp-04-70fa585adb86

- Now run the following command to configure docker authentication.

gcloud auth configure-docker

If prompted with Do you want to continue (Y/n)?, enter Y.

- To tag your image with the repository name, run this command. It already contains the Project ID for this lab session, which is also shown in the Console and in the Lab Details section of the lab:

docker tag hello-node:v1 us-west1-docker.pkg.dev/qwiklabs-gcp-04-70fa585adb86/my-docker-repo/hello-node:v1

- And push your container image to the repository by running the following command:

docker push us-west1-docker.pkg.dev/qwiklabs-gcp-04-70fa585adb86/my-docker-repo/hello-node:v1

The initial push may take a few minutes to complete. You'll see progress bars as the image layers upload.

The push refers to a repository [pkg.dev/qwiklabs-gcp-6h281a111f098/hello-node]

ba6ca48af64e: Pushed

381c97ba7dc3: Pushed

604c78617f34: Pushed

fa18e5ffd316: Pushed

0a5e2b2ddeaa: Pushed

53c779688d06: Pushed

60a0858edcd5: Pushed

b6ca02dfe5e6: Pushed

v1: digest: sha256:8a9349a355c8e06a48a1e8906652b9259bba6d594097f115060acca8e3e941a2 size: 2002

- The container image will be listed in your Console. Click Navigation menu > Artifact Registry.

Now you have a project-wide Docker image available which Kubernetes can access and orchestrate.

Note: We used the recommended way of working with Artifact Registry, which is specific about which region to use. To learn more, refer to Pushing and pulling from Artifact Registry.
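The image names in the docker tag and docker push commands above follow one pattern: the region, the docker.pkg.dev host suffix, the project ID, the repository, and then the image name and tag. As a minimal sketch of that pattern, the path can be assembled as shown below. The function name artifactRegistryImage and its parameter names are hypothetical and chosen only for illustration; PROJECT_ID is a placeholder.

```javascript
// Hypothetical helper: assembles an Artifact Registry image path from its parts.
// The pattern mirrors the docker tag and docker push commands used in this task.
function artifactRegistryImage({ region, projectId, repository, image, tag }) {
  return `${region}-docker.pkg.dev/${projectId}/${repository}/${image}:${tag}`;
}

// Example with the values from this lab; PROJECT_ID is a placeholder.
const imagePath = artifactRegistryImage({
  region: 'us-west1',
  projectId: 'PROJECT_ID',
  repository: 'my-docker-repo',
  image: 'hello-node',
  tag: 'v1',
});
console.log(imagePath);
// prints "us-west1-docker.pkg.dev/PROJECT_ID/my-docker-repo/hello-node:v1"
```

Because the region is part of the host name, an image pushed to a repository in one region is addressed with that region's prefix everywhere it is used later, including in the deployment.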

Task 3. Create your cluster

Now you're ready to create your Kubernetes Engine cluster. A cluster consists of a Kubernetes master API server hosted by Google and a set of worker nodes. The worker nodes are Compute Engine virtual machines.

- Make sure you have set your project using gcloud (replace PROJECT_ID with your Project ID, found in the console and in the Lab Details section of the lab):

gcloud config set project PROJECT_ID

- Create a cluster with two e2-medium nodes (this will take a few minutes to complete):

gcloud container clusters create hello-world \
    --num-nodes 2 \
    --machine-type e2-medium \
    --zone "us-west1-a"

You can safely ignore warnings that come up when the cluster builds.

The console output should look like this:

Creating cluster hello-world...done.
Created [https://container.googleapis.com/v1/projects/PROJECT_ID/zones/us-west1-a/clusters/hello-world].
kubeconfig entry generated for hello-world.
NAME         ZONE        MASTER_VERSION  MASTER_IP       MACHINE_TYPE  STATUS
hello-world  us-west1-a  1.5.7           146.148.46.124  e2-medium     RUNNING

Alternatively, you can create this cluster through the Console by opening the Navigation menu and selecting Kubernetes Engine > Kubernetes clusters > Create.

Note: It is recommended to create the cluster in the same zone as the storage bucket used by the artifact registry (see previous step).

If you select Navigation menu > Kubernetes Engine, you'll see that you have a fully-functioning Kubernetes cluster powered by Kubernetes Engine.

It's time to deploy your own containerized application to the Kubernetes cluster! From now on you'll use the kubectl command line (already set up in your Cloud Shell environment).

Task 4. Create your pod

A Kubernetes pod is a group of containers tied together for administration and networking purposes. It can contain one or more containers. Here you'll use one container built with your Node.js image stored in your private Artifact Registry repository. It will serve content on port 8080.

- Create a pod with the kubectl create deployment command (replace PROJECT_ID with your Project ID, found in the console and in the Lab Details section of the lab):

kubectl create deployment hello-node \
    --image=us-west1-docker.pkg.dev/PROJECT_ID/my-docker-repo/hello-node:v1

Output:

deployment.apps/hello-node created

As you can see, you've created a deployment object. Deployments are the recommended way to create and scale pods. Here, a new deployment manages a single pod replica running the hello-node:v1 image.

- To view the deployment, run:

kubectl get deployments

Output:

NAME READY UP-TO-DATE AVAILABLE AGE

hello-node 1/1 1 1 1m36s

- To view the pod created by the deployment, run:

kubectl get pods

Output:

NAME READY STATUS RESTARTS AGE

hello-node-714049816-ztzrb 1/1 Running 0 6m

Now is a good time to go through some interesting kubectl commands. None of these will change the state of the cluster. To view the full reference documentation, refer to Command line tool (kubectl):

kubectl cluster-info

kubectl config view

And for troubleshooting:

kubectl get events

kubectl logs <pod-name>

You now need to make your pod accessible to the outside world.

Task 5. Allow external traffic

By default, the pod is only accessible by its internal IP within the cluster. In order to make the hello-node container accessible from outside the Kubernetes virtual network, you have to expose the pod as a Kubernetes service.

- From Cloud Shell you can expose the pod to the public internet with the kubectl expose command combined with the --type="LoadBalancer" flag. This flag is required for the creation of an externally accessible IP:

kubectl expose deployment hello-node --type="LoadBalancer" --port=8080

Output:

service/hello-node exposed

The flag used in this command specifies that it is using the load balancer provided by the underlying infrastructure (in this case the Compute Engine load balancer). Note that you expose the deployment rather than the pod directly. This causes the resulting service to load balance traffic across all pods managed by the deployment (in this case only 1 pod, but you will add more replicas later).

The Kubernetes master creates the load balancer and related Compute Engine forwarding rules, target pools, and firewall rules to make the service fully accessible from outside of Google Cloud.

- To find the publicly accessible IP address of the service, ask kubectl to list all the cluster services:

kubectl get services

This is the output you should see:

NAME         CLUSTER-IP     EXTERNAL-IP      PORT(S)    AGE
hello-node   10.3.250.149   104.154.90.147   8080/TCP   1m
kubernetes   10.3.240.1     <none>           443/TCP    5m

There are 2 IP addresses listed for your hello-node service, both serving port 8080. The CLUSTER-IP is the internal IP that is only visible inside your cloud virtual network; the EXTERNAL-IP is the external load-balanced IP.

Note: The EXTERNAL-IP may take several minutes to become available and visible. If the EXTERNAL-IP is missing, wait a few minutes and run the command again.

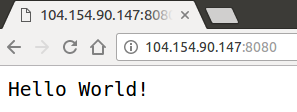

- You should now be able to reach the service by pointing your browser to this address:

http://<EXTERNAL_IP>:8080

At this point you've gained several features from moving to containers and Kubernetes: you do not need to specify which host runs your workload, and you also benefit from service monitoring and restart. Next, see what else you can gain from your new Kubernetes infrastructure.

Task 6. Scale up your service

One of the powerful features offered by Kubernetes is how easy it is to scale your application. Suppose you suddenly need more capacity. You can tell the deployment to manage a new number of replicas for your pod.

- Set the number of replicas for your pod:

kubectl scale deployment hello-node --replicas=4

Output:

deployment.extensions/hello-node scaled

- Request a description of the updated deployment:

kubectl get deployment

Output:

NAME READY UP-TO-DATE AVAILABLE AGE

hello-node 4/4 4 4 16m

Re-run the above command until you see all 4 replicas created.

- List all the pods:

kubectl get pods

This is the output you should see:

NAME READY STATUS RESTARTS AGE

hello-node-714049816-g4azy 1/1 Running 0 1m

hello-node-714049816-rk0u6 1/1 Running 0 1m

hello-node-714049816-sh812 1/1 Running 0 1m

hello-node-714049816-ztzrb 1/1 Running 0 16m

A declarative approach is being used here. Rather than starting or stopping new instances, you declare how many instances should be running at all times. Kubernetes reconciliation loops make sure that reality matches what you requested and take action if needed.
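The idea of a reconciliation loop can be illustrated with a toy model: compare the desired replica count with the pods that are actually running, and work out what has to change. The sketch below is only a teaching aid under that assumption. The function name reconcile and the shape of its return value are hypothetical, and this is not the logic that Kubernetes itself runs.

```javascript
// Toy model of one reconciliation step: compare desired state with observed
// state and return the action needed to close the gap.
function reconcile(desiredReplicas, runningPods) {
  const diff = desiredReplicas - runningPods.length;
  if (diff > 0) {
    return { action: 'create', count: diff };
  }
  if (diff < 0) {
    return { action: 'delete', count: -diff };
  }
  return { action: 'none', count: 0 };
}

// One pod is running and four are desired, as right after the scale command.
console.log(reconcile(4, ['hello-node-714049816-ztzrb']));
// prints { action: 'create', count: 3 }
```

The caller never says "start three pods". It states the target of four, and the difference from the observed state determines the action. Running the same step again once four pods exist yields no further action.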

Here's a diagram summarizing the state of your Kubernetes cluster:

Task 7. Roll out an upgrade to your service

At some point the application that you've deployed to production will require bug fixes or additional features. Kubernetes helps you deploy a new version to production without impacting your users.

- First, modify the application by opening server.js:

vi server.js

i

- Then update the response message:

response.end("Hello Kubernetes World!");

- Save the server.js file by pressing Esc, then typing:

:wq

Now you can build and publish a new container image to the registry with an incremented tag (v2 in this case).

- Run the following commands, replacing PROJECT_ID with your lab project ID:

docker build -t hello-node:v2 .

docker tag hello-node:v2 us-west1-docker.pkg.dev/PROJECT_ID/my-docker-repo/hello-node:v2

docker push us-west1-docker.pkg.dev/PROJECT_ID/my-docker-repo/hello-node:v2

Note: Building and pushing this updated image should be quicker because Docker reuses cached layers.

Kubernetes will smoothly update your deployment to the new version of the application. To change the image tag for your running container, you will edit the existing hello-node deployment and change the image from us-west1-docker.pkg.dev/PROJECT_ID/my-docker-repo/hello-node:v1 to us-west1-docker.pkg.dev/PROJECT_ID/my-docker-repo/hello-node:v2.

- To do this, use the kubectl edit command:

kubectl edit deployment hello-node

It opens a text editor displaying the full deployment YAML configuration. You don't need to understand the full YAML config right now; just note that by updating the spec.template.spec.containers.image field you are telling the deployment to update the pods with the new image.
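To make the field path concrete: containers is a list, so the image field belongs to each container entry rather than to the list itself. The following minimal sketch applies the same change to a plain JavaScript object instead of the live deployment. The helper name setContainerImage is hypothetical, and the object is a trimmed-down stand-in that holds only the fields this illustration needs.

```javascript
// Hypothetical helper: returns a copy of a deployment object in which the
// named container's image is replaced. It touches only
// spec.template.spec.containers[].image.
function setContainerImage(deployment, containerName, newImage) {
  const copy = JSON.parse(JSON.stringify(deployment)); // deep copy of plain data
  for (const container of copy.spec.template.spec.containers) {
    if (container.name === containerName) {
      container.image = newImage;
    }
  }
  return copy;
}

// A trimmed-down deployment with only the fields this sketch needs.
const deployment = {
  spec: {
    replicas: 4,
    template: {
      spec: {
        containers: [
          {
            name: 'hello-node',
            image: 'us-west1-docker.pkg.dev/PROJECT_ID/my-docker-repo/hello-node:v1',
          },
        ],
      },
    },
  },
};

const updated = setContainerImage(
  deployment,
  'hello-node',
  'us-west1-docker.pkg.dev/PROJECT_ID/my-docker-repo/hello-node:v2'
);
console.log(updated.spec.template.spec.containers[0].image);
```

Only the image string changes; the replica count and every other field stay as they were, which is why a one-line edit is enough to trigger the update.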

- Look for Spec > containers > image and change the version number from v1 to v2:

# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  creationTimestamp: 2016-03-24T17:55:28Z
  generation: 3
  labels:
    run: hello-node
  name: hello-node
  namespace: default
  resourceVersion: "151017"
  selfLink: /apis/extensions/v1beta1/namespaces/default/deployments/hello-node
  uid: 981fe302-f1e9-11e5-9a78-42010af00005
spec:
  replicas: 4
  selector:
    matchLabels:
      run: hello-node
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        run: hello-node
    spec:
      containers:
      - image: us-west1-docker.pkg.dev/PROJECT_ID/my-docker-repo/hello-node:v1 ## Update this line ##
        imagePullPolicy: IfNotPresent
        name: hello-node
        ports:
        - containerPort: 8080
          protocol: TCP
        resources: {}
        terminationMessagePath: /dev/termination-log
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      securityContext: {}
      terminationGracePeriodSeconds: 30

- After making the change, save and close this file: Press ESC, then:

:wq

This is the output you should see:

deployment.extensions/hello-node edited

- Saving the file starts the update. Run the following to check the deployment as it rolls out the new image:

kubectl get deployments

New pods will be created with the new image and the old pods will be deleted.

This is the output you should see (you may need to rerun the above command to see the following):

NAME READY UP-TO-DATE AVAILABLE AGE

hello-node 4/4 4 4 1h

While this is happening, the users of your services shouldn't see any interruption. After a little while they'll start accessing the new version of your application. You can find more details on rolling updates in the Performing a Rolling Update documentation.
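The reason users should see no interruption is that the rolling update strategy bounds how many pods can be added or removed at any moment. The simulation below is a simplified sketch of that bookkeeping, using the maxSurge and maxUnavailable values from the deployment configuration. It assumes every new pod becomes available immediately, which a real cluster does not guarantee, and it is not the algorithm the deployment controller actually uses. The function name simulateRollingUpdate is hypothetical.

```javascript
// Simplified simulation of a rolling update under two limits:
//   total pods     <= replicas + maxSurge
//   available pods >= replicas - maxUnavailable
// Assumption: new pods are available as soon as they are created.
function simulateRollingUpdate(replicas, maxSurge, maxUnavailable) {
  if (maxSurge === 0 && maxUnavailable === 0) {
    throw new Error('maxSurge and maxUnavailable cannot both be 0');
  }
  let oldPods = replicas;
  let newPods = 0;
  const steps = [];
  while (oldPods > 0 || newPods < replicas) {
    // Create new pods without exceeding replicas + maxSurge in total.
    const room = replicas + maxSurge - (oldPods + newPods);
    newPods += Math.min(replicas - newPods, room);
    // Delete old pods without dropping below replicas - maxUnavailable.
    const spare = oldPods + newPods - (replicas - maxUnavailable);
    oldPods -= Math.min(oldPods, spare);
    steps.push({ oldPods, newPods });
  }
  return steps;
}

// Values from the deployment in this lab: 4 replicas, maxSurge 1, maxUnavailable 1.
console.log(simulateRollingUpdate(4, 1, 1));
```

In every recorded step the total number of pods stays at or above three, so under these assumptions there is always capacity to serve requests while old pods are replaced.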

Hopefully, with these deployment, scaling, and update features, you'll agree that once you've set up your Kubernetes Engine cluster, Kubernetes helps you focus on the application rather than the infrastructure.

ConOverview

The goal of this hands-on lab is for you to turn code that you have developed into a replicated application running on Kubernetes, which is running on Kubernetes Engine. For this lab the code will be a simple Hello World node.js app.

Here's a diagram of the various parts in play in this lab, to help you understand how the pieces fit together with one another. Use this as a reference as you progress through the lab; it should all make sense by the time you get to the end (but feel free to ignore this for now).

Kubernetes is an open source project (available on kubernetes.io) which can run on many different environments, from laptops to high-availability multi-node clusters; from public clouds to on-premise deployments; from virtual machines to bare metal.

For the purpose of this lab, using a managed environment such as Kubernetes Engine (a Google-hosted version of Kubernetes running on Compute Engine) will allow you to focus more on experiencing Kubernetes rather than setting up the underlying infrastructure.

What you'll learn

Create a Node.js server.

Create a Docker container image.

Create a container cluster.

Create a Kubernetes pod.

Scale up your services.

Prerequisites

- Familiarity with standard Linux text editors such as

vim,emacs, ornanowill be helpful.

Students are to type the commands themselves, to help encourage learning of the core concepts. Many labs will include a code block that contains the required commands. You can easily copy and paste the commands from the code block into the appropriate places during the lab.

Setup and requirements

Before you click the Start Lab button

Read these instructions. Labs are timed and you cannot pause them. The timer, which starts when you click Start Lab, shows how long Google Cloud resources are made available to you.

This hands-on lab lets you do the lab activities in a real cloud environment, not in a simulation or demo environment. It does so by giving you new, temporary credentials you use to sign in and access Google Cloud for the duration of the lab.

To complete this lab, you need:

- Access to a standard internet browser (Chrome browser recommended).

Note: Use an Incognito (recommended) or private browser window to run this lab. This prevents conflicts between your personal account and the student account, which may cause extra charges incurred to your personal account.

- Time to complete the lab—remember, once you start, you cannot pause a lab.

Note: Use only the student account for this lab. If you use a different Google Cloud account, you may incur charges to that account.

How to start your lab and sign in to the Google Cloud console

Click the Start Lab button. If you need to pay for the lab, a dialog opens for you to select your payment method. On the left is the Lab Details pane with the following:

The Open Google Cloud console button

Time remaining

The temporary credentials that you must use for this lab

Other information, if needed, to step through this lab

Click Open Google Cloud console (or right-click and select Open Link in Incognito Window if you are running the Chrome browser).

The lab spins up resources, and then opens another tab that shows the Sign in page.

Tip: Arrange the tabs in separate windows, side-by-side.

Note: If you see the Choose an account dialog, click Use Another Account.

If necessary, copy the Username below and paste it into the Sign in dialog.

student-03-d9dead68b660@qwiklabs.netYou can also find the Username in the Lab Details pane.

Click Next.

Copy the Password below and paste it into the Welcome dialog.

vyJpF8vxnTXfYou can also find the Password in the Lab Details pane.

Click Next.

Important: You must use the credentials the lab provides you. Do not use your Google Cloud account credentials.

Note: Using your own Google Cloud account for this lab may incur extra charges.

Click through the subsequent pages:

Accept the terms and conditions.

Do not add recovery options or two-factor authentication (because this is a temporary account).

Do not sign up for free trials.

After a few moments, the Google Cloud console opens in this tab.

Note: To access Google Cloud products and services, click the Navigation menu or type the service or product name in the Search field.

Activate Cloud Shell

Cloud Shell is a virtual machine that is loaded with development tools. It offers a persistent 5GB home directory and runs on the Google Cloud. Cloud Shell provides command-line access to your Google Cloud resources.

Click Activate Cloud Shell at the top of the Google Cloud console.

Click through the following windows:

Continue through the Cloud Shell information window.

Authorize Cloud Shell to use your credentials to make Google Cloud API calls.

When you are connected, you are already authenticated, and the project is set to your Project_ID, qwiklabs-gcp-04-70fa585adb86. The output contains a line that declares the Project_ID for this session:

Your Cloud Platform project in this session is set to qwiklabs-gcp-04-70fa585adb86

gcloud is the command-line tool for Google Cloud. It comes pre-installed on Cloud Shell and supports tab-completion.

- (Optional) You can list the active account name with this command:

gcloud auth list

- Click Authorize.

Output:

ACTIVE: *

ACCOUNT: student-03-d9dead68b660@qwiklabs.net

To set the active account, run:

$ gcloud config set account `ACCOUNT`

- (Optional) You can list the project ID with this command:

gcloud config list project

Output:

[core]

project = qwiklabs-gcp-04-70fa585adb86

Note: For full documentation of gcloud, in Google Cloud, refer to the gcloud CLI overview guide.

Task 1. Create your Node.js application

1.Using Cloud Shell, write a simple Node.js server that you'll deploy to Kubernetes Engine:

vi server.js

- Start the editor:

i

- Add this content to the file:

var http = require('http');

var handleRequest = function(request, response) {

response.writeHead(200);

response.end("Hello World!");

}

var www = http.createServer(handleRequest);

www.listen(8080);

Note: vi is used here, but nano and emacs are also available in Cloud Shell. You can also use the Web-editor feature of CloudShell as described in the How Cloud Shell works guide.

- Save the

server.jsfile by pressing Esc then:

:wq

- Since Cloud Shell has the

nodeexecutable installed, run this command to start the node server (the command produces no output):

node server.js

- Use the built-in Web preview feature of Cloud Shell to open a new browser tab and proxy a request to the instance you just started on port

8080.

A new browser tab will open to display your results:

- Before continuing, return to Cloud Shell and type CTRL+C to stop the running node server.

Next you will package this application in a Docker container.

Task 2. Create a Docker container image

- Next, create a

Dockerfilethat describes the image you want to build. Docker container images can extend from other existing images, so for this image, we'll extend from an existing Node image:

vi Dockerfile

- Start the editor:

i

- Add this content:

FROM node:6.9.2

EXPOSE 8080

COPY server.js .

CMD ["node", "server.js"]

This "recipe" for the Docker image will:

Start from the

nodeimage found on the Docker hub.Expose port

8080.Copy your

server.jsfile to the image.Start the node server as we previously did manually.

- Save this

Dockerfileby pressing ESC, then type:

:wq

- Build the image with the following:

docker build -t hello-node:v1 .

It'll take some time to download and extract everything, but you can see the progress bars as the image builds.

Once complete, test the image locally by running a Docker container as a daemon on port 8080 from your newly-created container image.

- Run the Docker container with this command:

docker run -d -p 8080:8080 hello-node:v1

Your output should look something like this:

325301e6b2bffd1d0049c621866831316d653c0b25a496d04ce0ec6854cb7998

- To see your results, use the web preview feature of Cloud Shell. Alternatively use

curlfrom your Cloud Shell prompt:

curl http://localhost:8080

This is the output you should see:

Hello World!

Note: Full documentation for the docker run command can be found in the Docker run reference.

Next, stop the running container.

- Find your Docker container ID by running:

docker ps

Your output you should look like this:

CONTAINER ID IMAGE COMMAND

2c66d0efcbd4 hello-node:v1 "/bin/sh -c 'node

- Stop the container by running the following, replacing the

[CONTAINER ID]with the value provided from the previous step:

docker stop [CONTAINER ID]

Your console output should resemble the following (your container ID):

2c66d0efcbd4

Now that the image is working as intended, push it to the Google Artifact Registry, a private repository for your Docker images, accessible from your Google Cloud projects.

- First you need to create a repository in Artifact Registry. Let's call it

my-docker-repo. Run the following command:

gcloud artifacts repositories create my-docker-repo \

--repository-format=docker \

--location=us-west1 \

--project=qwiklabs-gcp-04-70fa585adb86

- Now run the following command to configure docker authentication.

gcloud auth configure-docker

If Prompted, Do you want to continue (Y/n)?. Enter Y.

- To tag your image with the repository name, run this command, replacing

PROJECT_IDwith your Project ID, found in the Console or the Lab Details section of the lab:

docker tag hello-node:v1 us-west1-docker.pkg.dev/qwiklabs-gcp-04-70fa585adb86/my-docker-repo/hello-node:v1

- And push your container image to the repository by running the following command:

docker push us-west1-docker.pkg.dev/qwiklabs-gcp-04-70fa585adb86/my-docker-repo/hello-node:v1

The initial push may take a few minutes to complete. You'll see the progress bars as it builds.

The push refers to a repository [pkg.dev/qwiklabs-gcp-6h281a111f098/hello-node]

ba6ca48af64e: Pushed

381c97ba7dc3: Pushed

604c78617f34: Pushed

fa18e5ffd316: Pushed

0a5e2b2ddeaa: Pushed

53c779688d06: Pushed

60a0858edcd5: Pushed

b6ca02dfe5e6: Pushed

v1: digest: sha256:8a9349a355c8e06a48a1e8906652b9259bba6d594097f115060acca8e3e941a2 size: 2002

- The container image will be listed in your Console. Click Navigation menu > Artifact Registry.

Now you have a project-wide Docker image available which Kubernetes can access and orchestrate.

Note: We used the recommended way of working with Artifact Registry, which is specific about which region to use. To learn more, refer to Pushing and pulling from Artifact Registry.
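As a minimal sketch of how the region-specific image path is put together, the snippet below builds it from shell variables so that the tag and push steps use exactly the same string. The variable names are illustrative assumptions and not part of the lab, and PROJECT_ID remains a placeholder for your own Project ID.

```shell
# Illustrative variable names (assumptions); replace the values with your own.
REGION="us-west1"
PROJECT_ID="PROJECT_ID"   # placeholder: use your Project ID
REPO="my-docker-repo"
IMAGE="hello-node"
TAG="v1"

# Path layout used in this lab: REGION-docker.pkg.dev/PROJECT_ID/REPOSITORY/IMAGE:TAG
IMAGE_URI="${REGION}-docker.pkg.dev/${PROJECT_ID}/${REPO}/${IMAGE}:${TAG}"
echo "${IMAGE_URI}"

# The same variable can then feed both steps (commented out so this
# snippet only prints the path):
# docker tag "${IMAGE}:${TAG}" "${IMAGE_URI}"
# docker push "${IMAGE_URI}"
```

Keeping the path in one variable avoids the tag and push commands drifting apart when the region or repository name changes.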

Task 3. Create your cluster

Now you're ready to create your Kubernetes Engine cluster. A cluster consists of a Kubernetes master API server hosted by Google and a set of worker nodes. The worker nodes are Compute Engine virtual machines.

- Make sure you have set your project using gcloud (replace PROJECT_ID with your Project ID, found in the console and in the Lab Details section of the lab):

gcloud config set project PROJECT_ID

- Create a cluster with two e2-medium nodes (this will take a few minutes to complete):

gcloud container clusters create hello-world \
  --num-nodes 2 \
  --machine-type e2-medium \
  --zone "us-west1-a"

You can safely ignore warnings that come up when the cluster builds.

The console output should look like this:

Creating cluster hello-world...done.

Created [https://container.googleapis.com/v1/projects/PROJECT_ID/zones/us-west1-a/clusters/hello-world].

kubeconfig entry generated for hello-world.

NAME         ZONE        MASTER_VERSION  MASTER_IP       MACHINE_TYPE  STATUS
hello-world  us-west1-a  1.5.7           146.148.46.124  e2-medium     RUNNING

Alternatively, you can create this cluster through the Console by opening the Navigation menu and selecting Kubernetes Engine > Kubernetes clusters > Create.

Note: It is recommended to create the cluster in the same region as the Artifact Registry repository created in the previous task (us-west1 in this lab).

If you select Navigation menu > Kubernetes Engine, you'll see that you have a fully-functioning Kubernetes cluster powered by Kubernetes Engine.

It's time to deploy your own containerized application to the Kubernetes cluster! From now on you'll use the kubectl command line (already set up in your Cloud Shell environment).
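One way to confirm from Cloud Shell that both worker nodes have registered with the cluster is to count the nodes reporting a Ready status. The helper below is a sketch: the function name count_ready_nodes is hypothetical, and it assumes the usual column layout of kubectl get nodes, where the second column is STATUS.

```shell
# Count nodes whose STATUS column is exactly "Ready".
# Reads the output of `kubectl get nodes --no-headers` on stdin.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage against a live cluster (requires kubectl and cluster credentials):
#   kubectl get nodes --no-headers | count_ready_nodes
```

For the cluster created in this task, the expected count is 2 once both e2-medium nodes are up.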


Task 4. Create your pod

A Kubernetes pod is a group of containers tied together for administration and networking purposes. It can contain one or more containers. Here you'll use one container built with your Node.js image stored in your private Artifact Registry repository. It will serve content on port 8080.

- Create a deployment, which in turn creates your pod, with the kubectl create deployment command (replace PROJECT_ID with your Project ID, found in the console and in the Connection Details section of the lab):

kubectl create deployment hello-node \
  --image=us-west1-docker.pkg.dev/PROJECT_ID/my-docker-repo/hello-node:v1

Output:

deployment.apps/hello-node created

As you can see, you've created a deployment object. Deployments are the recommended way to create and scale pods. Here, a new deployment manages a single pod replica running the hello-node:v1 image.

- To view the deployment, run:

kubectl get deployments

Output:

NAME READY UP-TO-DATE AVAILABLE AGE

hello-node 1/1 1 1 1m36s

- To view the pod created by the deployment, run:

kubectl get pods

Output:

NAME READY STATUS RESTARTS AGE

hello-node-714049816-ztzrb 1/1 Running 0 6m

Now is a good time to go through some interesting kubectl commands. None of these will change the state of the cluster. To view the full reference documentation, refer to Command line tool (kubectl):

kubectl cluster-info

kubectl config view

And for troubleshooting:

kubectl get events

kubectl logs <pod-name>
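Because pod names carry a generated suffix, it can help to look the name up rather than copy it by hand. The following is a sketch only: first_pod_name is a hypothetical helper that picks the first pod whose name starts with the deployment name followed by a hyphen.

```shell
# Print the name of the first pod whose name starts with "<deployment>-".
# Reads the output of `kubectl get pods --no-headers` on stdin.
first_pod_name() {
  awk -v prefix="$1-" 'index($1, prefix) == 1 { print $1; exit }'
}

# Usage against a live cluster (requires kubectl and cluster credentials):
#   kubectl logs "$(kubectl get pods --no-headers | first_pod_name hello-node)"
```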

You now need to make your pod accessible to the outside world.


Task 5. Allow external traffic

By default, the pod is only accessible by its internal IP within the cluster. In order to make the hello-node container accessible from outside the Kubernetes virtual network, you have to expose the pod as a Kubernetes service.

- From Cloud Shell you can expose the pod to the public internet with the kubectl expose command combined with the --type="LoadBalancer" flag. This flag is required for the creation of an externally accessible IP:

kubectl expose deployment hello-node --type="LoadBalancer" --port=8080

Output:

service/hello-node exposed

The flag used in this command specifies that the service uses the load balancer provided by the underlying infrastructure (in this case the Compute Engine load balancer). Note that you expose the deployment rather than the pod directly. The resulting service therefore load balances traffic across all pods managed by the deployment (in this case only 1 pod, but you will add more replicas later).

The Kubernetes master creates the load balancer and related Compute Engine forwarding rules, target pools, and firewall rules to make the service fully accessible from outside of Google Cloud.

- To find the publicly accessible IP address of the service, ask kubectl to list all the cluster services:

kubectl get services

This is the output you should see:

NAME        CLUSTER-IP    EXTERNAL-IP     PORT(S)   AGE
hello-node  10.3.250.149  104.154.90.147  8080/TCP  1m
kubernetes  10.3.240.1    <none>          443/TCP   5m

There are 2 IP addresses listed for your hello-node service, both serving port 8080. The CLUSTER-IP is the internal IP that is only visible inside your cloud virtual network; the EXTERNAL-IP is the external load-balanced IP.

Note: The EXTERNAL-IP may take several minutes to become available and visible. If the EXTERNAL-IP is missing, wait a few minutes and run the command again.
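Rather than re-running the command by hand, the wait can be scripted. The sketch below polls a command until it prints a non-empty value; wait_for_value is a hypothetical helper, and the usage line assumes the load balancer address appears under status.loadBalancer.ingress[0].ip of the service, which is where kubectl reports it for an IP-based load balancer.

```shell
# Poll until the given command prints a non-empty value, or give up.
# Arguments: max attempts, delay in seconds, then the command to run.
wait_for_value() {
  wfv_attempts="$1"
  wfv_delay="$2"
  shift 2
  wfv_i=0
  while [ "$wfv_i" -lt "$wfv_attempts" ]; do
    # Treat a failing command the same as an empty result and retry.
    wfv_value="$("$@" 2>/dev/null)" || true
    if [ -n "$wfv_value" ]; then
      printf '%s\n' "$wfv_value"
      return 0
    fi
    wfv_i=$((wfv_i + 1))
    sleep "$wfv_delay"
  done
  return 1
}

# Usage against a live cluster (requires kubectl and cluster credentials):
#   wait_for_value 30 10 kubectl get service hello-node \
#     -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
```

The helper returns a non-zero status if the address never appears, so a calling script can stop instead of continuing with an empty IP.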

- You should now be able to reach the service by pointing your browser to this address:

http://<EXTERNAL_IP>:8080

At this point you've gained several features from moving to containers and Kubernetes - you do not need to specify on which host to run your workload and you also benefit from service monitoring and restart. Now see what else can be gained from your new Kubernetes infrastructure.


Task 6. Scale up your service

One of the powerful features offered by Kubernetes is how easy it is to scale your application. Suppose you suddenly need more capacity. You can tell the deployment to manage a new number of replicas for your pod.

- Set the number of replicas for your pod:

kubectl scale deployment hello-node --replicas=4

Output:

deployment.apps/hello-node scaled

- Request a description of the updated deployment:

kubectl get deployment

Output:

NAME READY UP-TO-DATE AVAILABLE AGE

hello-node 4/4 4 4 16m

Re-run the above command until you see all 4 replicas created.
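The check for "all replicas created" can also be expressed as a small test on the READY column. This is a sketch under the assumption that the second column has the form ready/desired, as in the output above; all_replicas_ready is a hypothetical helper name.

```shell
# Succeed when the READY column (for example "4/4") of the named
# deployment shows as many ready replicas as desired replicas.
# Reads the output of `kubectl get deployments --no-headers` on stdin.
all_replicas_ready() {
  awk -v name="$1" '
    $1 == name { split($2, r, "/"); ok = (r[1] == r[2]) }
    END { exit (ok ? 0 : 1) }
  '
}

# Usage against a live cluster (requires kubectl and cluster credentials):
#   kubectl get deployments --no-headers | all_replicas_ready hello-node \
#     && echo "hello-node is fully scaled"
```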

- List all the pods:

kubectl get pods

This is the output you should see:

NAME READY STATUS RESTARTS AGE

hello-node-714049816-g4azy 1/1 Running 0 1m

hello-node-714049816-rk0u6 1/1 Running 0 1m

hello-node-714049816-sh812 1/1 Running 0 1m

hello-node-714049816-ztzrb 1/1 Running 0 16m

A declarative approach is being used here. Rather than starting or stopping new instances, you declare how many instances should be running at all times. Kubernetes reconciliation loops make sure that reality matches what you requested and take action if needed.
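To make the idea of reconciliation concrete, here is a toy illustration. It is not how Kubernetes is implemented; the reconcile function is hypothetical and only shows the comparison between a desired count and an observed count that such a loop repeats.

```shell
# Toy reconciliation step: compare desired and actual replica counts
# and print the action a controller would need to take.
reconcile() {
  desired="$1"
  actual="$2"
  if [ "$actual" -lt "$desired" ]; then
    echo "start $((desired - actual))"
  elif [ "$actual" -gt "$desired" ]; then
    echo "stop $((actual - desired))"
  else
    echo "no change"
  fi
}

reconcile 4 1   # prints: start 3
reconcile 4 4   # prints: no change
```

Scaling from 1 to 4 replicas corresponds to the first call: you only change the desired number, and the gap of 3 pods is closed for you.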

Here's a diagram summarizing the state of your Kubernetes cluster:


Task 7. Roll out an upgrade to your service

At some point the application that you've deployed to production will require bug fixes or additional features. Kubernetes helps you deploy a new version to production without impacting your users.

- First, modify the application by opening server.js:

vi server.js

Press i to enter insert mode.

- Then update the response message:

response.end("Hello Kubernetes World!");

- Save the server.js file by pressing Esc, then typing:

:wq

Now you can build and publish a new container image to the registry with an incremented tag (v2 in this case).

- Run the following commands, replacing PROJECT_ID with your lab project ID:

docker build -t hello-node:v2 .

docker tag hello-node:v2 us-west1-docker.pkg.dev/PROJECT_ID/my-docker-repo/hello-node:v2

docker push us-west1-docker.pkg.dev/PROJECT_ID/my-docker-repo/hello-node:v2

Note: Building and pushing this updated image should be quicker because Docker reuses the cached layers that did not change.

Kubernetes will smoothly update your deployment to the new version of the application. To change the image tag for your running container, you will edit the existing hello-node deployment and change the image from us-west1-docker.pkg.dev/PROJECT_ID/my-docker-repo/hello-node:v1 to us-west1-docker.pkg.dev/PROJECT_ID/my-docker-repo/hello-node:v2.

- To do this, use the kubectl edit command:

kubectl edit deployment hello-node

It opens a text editor displaying the full deployment YAML configuration. You don't need to understand the full YAML config right now. The key point is that by updating the spec.template.spec.containers.image field in the config, you tell the deployment to update the pods with the new image.

- Look for spec > containers > image and change the version number from v1 to v2:

# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
  creationTimestamp: 2016-03-24T17:55:28Z
  generation: 3
  labels:
    run: hello-node
  name: hello-node
  namespace: default
  resourceVersion: "151017"
  selfLink: /apis/extensions/v1beta1/namespaces/default/deployments/hello-node
  uid: 981fe302-f1e9-11e5-9a78-42010af00005
spec:
  replicas: 4
  selector:
    matchLabels:
      run: hello-node
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        run: hello-node
    spec:
      containers:
      - image: us-west1-docker.pkg.dev/PROJECT_ID/my-docker-repo/hello-node:v1 ## Update this line ##
        imagePullPolicy: IfNotPresent
        name: hello-node
        ports:
        - containerPort: 8080
          protocol: TCP
        resources: {}
        terminationMessagePath: /dev/termination-log
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      securityContext: {}
      terminationGracePeriodSeconds: 30

- After making the change, save and close this file: Press ESC, then:

:wq

This is the output you should see:

deployment.apps/hello-node edited

- Saving the edit triggers the update. Run the following to check the deployment while it rolls out the new image:

kubectl get deployments

New pods will be created with the new image and the old pods will be deleted.
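How many pods are replaced at a time is governed by the rolling update settings in the deployment. As a worked example, the arithmetic below uses the values from the sample configuration shown earlier (replicas: 4, maxSurge: 1, maxUnavailable: 1); the values in your own deployment may differ, and the variable names are only illustrative.

```shell
# Values taken from the sample deployment configuration (assumed here).
replicas=4
max_surge=1
max_unavailable=1

# Upper bound on pods that exist at once during the rollout.
max_total=$((replicas + max_surge))
# Lower bound on pods that stay available during the rollout.
min_available=$((replicas - max_unavailable))

echo "at most ${max_total} pods exist during the rollout"
echo "at least ${min_available} pods stay available during the rollout"
```

With these settings the rollout never runs more than 5 pods and never drops below 3 available pods, which is why users should not notice the switch.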

This is the output you should see (you may need to rerun kubectl get deployments to see the following):

NAME READY UP-TO-DATE AVAILABLE AGE

hello-node 4/4 4 4 1h

While this is happening, the users of your services shouldn't see any interruption. After a little while they'll start accessing the new version of your application. You can find more details on rolling updates in the Performing a Rolling Update documentation.
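As a non-interactive alternative to editing the configuration in a text editor, the same image change can be sketched with kubectl set image, followed by kubectl rollout status to wait for completion. This assumes the container inside the deployment is named hello-node, as in the configuration shown above. The run wrapper and the DRY_RUN variable are hypothetical conveniences: by default the snippet only prints the commands.

```shell
# Assumed image path; replace PROJECT_ID with your Project ID.
NEW_IMAGE="us-west1-docker.pkg.dev/PROJECT_ID/my-docker-repo/hello-node:v2"

# Print each command by default; set DRY_RUN=0 to execute it
# against your cluster.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Point the hello-node container of the deployment at the new image.
run kubectl set image deployment/hello-node "hello-node=${NEW_IMAGE}"
# Block until the rolling update finishes or fails.
run kubectl rollout status deployment/hello-node
```

Reviewing the printed commands first and then re-running with DRY_RUN=0 keeps the change deliberate while still being scriptable.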

Hopefully, with these deployment, scaling, and update features, you'll agree that once you've set up your Kubernetes Engine cluster, Kubernetes helps you focus on the application rather than the infrastructure.

Solution of Lab

Quick

curl -LO https://raw.githubusercontent.com/ePlus-DEV/storage/refs/heads/main/labs/GSP005/lab.sh

source lab.sh